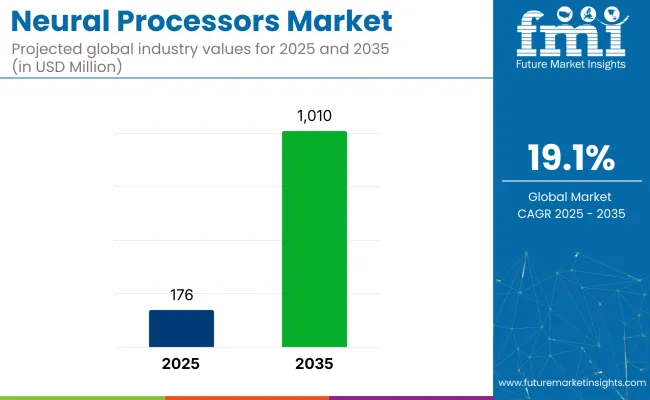

The global neural processors market is projected to increase from USD 176 million in 2025 to USD 1,010 million by 2035, registering a strong CAGR of 19.1%. This surge is being driven by the rising integration of dedicated AI hardware in smartphones, wearables, tablets, smart TVs, autonomous vehicles, and edge computing devices.
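The headline figures can be checked against each other with the standard compound-growth relationship, end = start × (1 + CAGR)^years. The short sketch below uses only the 2025 and 2035 values and the 19.1% rate quoted above. The function names are illustrative and do not come from the report.

```python
# Consistency check on the headline forecast: does USD 176 million growing
# at 19.1% a year for ten years land near USD 1,010 million?

def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` over `years`."""
    return (end / start) ** (1 / years) - 1


def project(start: float, cagr: float, years: int) -> float:
    """Value reached by compounding `start` at `cagr` for `years` periods."""
    return start * (1 + cagr) ** years


start_2025 = 176.0    # USD million, report's 2025 estimate
end_2035 = 1010.0     # USD million, report's 2035 projection
years = 2035 - 2025   # ten compounding periods

cagr = implied_cagr(start_2025, end_2035, years)
projected = project(start_2025, 0.191, years)

print(f"Implied CAGR 2025-2035: {cagr:.2%}")
print(f"USD 176M compounded at 19.1% for {years} years: USD {projected:,.0f}M")
```

The implied rate comes out at roughly 19.1%, and compounding forward lands within a few million dollars of the USD 1,010 million figure, so the three headline numbers agree up to rounding.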

Neural processing units (NPUs) are being used to accelerate machine learning inference tasks on-device, reducing latency, boosting power efficiency, and enhancing privacy by minimizing cloud dependency.

In a March 2025 interview with DIGITIMES, Amir Panush, CEO of Ceva, highlighted the company’s strategic focus on edge AI, stating, “Edge AI presents significant industry opportunities and will be a key growth engine for Ceva in the coming years.” The remark underscores Ceva’s commitment to its neural processor technologies and the weight the company places on real-time AI at the edge as a driver of future growth.

The industry holds a specialized share within its parent markets. In the semiconductor market, it accounts for approximately 1-2%, as these processors are a niche segment of the broader semiconductor industry. Within the artificial intelligence (AI) market, the share is around 5-7%, driven by their critical role in accelerating AI computations.

In the machine learning market, the processors represent about 4-6%, as they are essential for running machine learning models efficiently. Within the edge computing market, the share is approximately 3-5%, as neural processors are used for real-time, on-device processing. In the consumer electronics market, their share is around 2-3%, with increasing adoption in devices like smartphones and smart speakers for AI-powered functionalities.

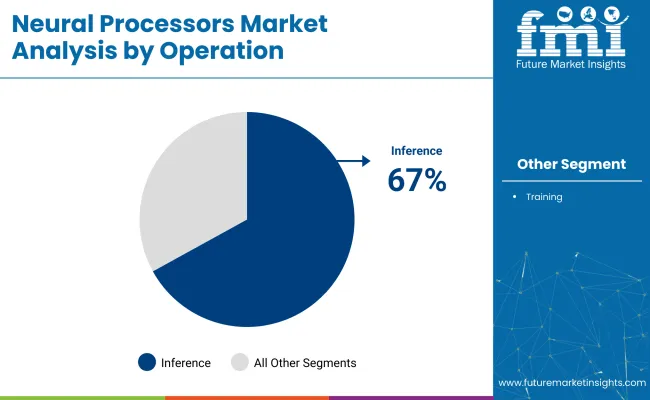

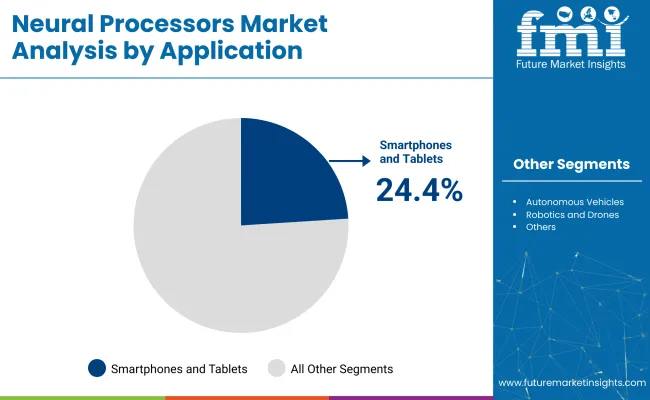

The industry is projected to grow, driven by inference-focused NPUs for edge AI and device-level intelligence. Inference operations will dominate with 67% industry share in 2025, while smartphones and tablets will lead the application segment with 24.4% industry share.
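Applied to the USD 176 million 2025 estimate, the segment shares translate into approximate dollar values. The sketch below assumes training makes up the remaining 33% of the operation split, because the report segments operations only into training and inference. That remainder is an inference from the segmentation and is not a figure the report states.

```python
# Convert the 2025 segment shares into approximate USD values.
market_2025 = 176.0  # USD million, report's 2025 estimate

segment_shares = {
    "Inference (operation)": 0.67,
    # Assumption: training is the remainder of the two-way operation split.
    "Training (operation)": 1 - 0.67,
    "Smartphones and tablets (application)": 0.244,
}

# Dollar value of each segment = total market size x segment share.
segment_values = {name: market_2025 * share for name, share in segment_shares.items()}

for name, value in segment_values.items():
    print(f"{name}: ~USD {value:.1f} million")
```

On these figures, inference accounts for roughly USD 118 million in 2025, and smartphones and tablets for roughly USD 43 million.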

Inference operations are expected to account for 67% of the neural processor industry in 2025, driven by the growing need for efficient AI model deployment at the edge. These tasks, such as facial recognition, speech-to-text, and noise suppression, are increasingly executed on-device to enhance privacy and reduce latency, reducing dependence on cloud infrastructure.

Chipmakers like NVIDIA, Qualcomm, and Intel are focusing on designing NPUs specialized for low-power inference applications across consumer and industrial sectors. Arm’s Ethos-U65 microNPU, for its part, delivers sub-milliwatt AI performance for IoT endpoints, expanding edge deployment in smart cities and wearables. Interoperability with the cloud lets devices run inference locally while staying synchronized with centralized models, which further cements inference as a core focus of NPU design strategies.

Smartphones and tablets are projected to capture 24.4% of the industry in 2025, with growing integration of NPUs to power AI-driven features. These devices leverage NPUs for applications such as real-time language processing, intelligent photography, and device customization.

For example, Google’s Tensor G3 chip, introduced in 2023, brought a triple-core TPU to Pixel smartphones, enhancing language processing and generative image editing. Major players like Apple, Samsung, and Xiaomi are continuously improving NPU performance with each hardware cycle.

According to Counterpoint Research, 89% of smartphones released in Q1 2025 are expected to include dedicated AI hardware, highlighting the increasing reliance on NPUs in mobile platforms. Tablets used for education and productivity also benefit from NPUs for enhanced UI responsiveness and offline AI capabilities.

The neural processors market is expanding due to the growing demand for edge AI and on-device intelligence, especially in industries like smartphones and automotive. However, high development costs, architectural fragmentation, and geopolitical tensions are limiting industry scalability, particularly for new entrants and general-purpose devices.

Edge AI Growth and On-Device Intelligence are Fueling Neural Processor Adoption

The demand for neural processors is growing rapidly due to the increasing need for on-device artificial intelligence (AI) across industries like smartphones, automotive, healthcare, and consumer electronics. Edge computing is increasingly taking over AI workloads once handled in the cloud, enabling faster, real-time processing and enhanced privacy.

Neural Processing Units (NPUs) are integrated into chipsets to support features such as face unlock, voice recognition, predictive maintenance, and autonomous navigation. Companies like Apple, Google, and Huawei are leading innovations, and as 5G and IoT ecosystems mature, neural processors are becoming essential for seamless, low-latency AI applications.

High Barriers to Entry and Architectural Fragmentation Limit Industry Scalability

Despite strong demand, the industry faces significant barriers to scalability due to high development costs and architectural fragmentation. Designing NPUs requires specialized knowledge of chip architecture and deep learning, which is concentrated in leading semiconductor firms. Startups struggle with the high cost of custom chip development and lack of standardized software frameworks.

Moreover, the industry’s fragmentation into different use cases, such as training versus inference, creates compatibility issues and slows adoption. Geopolitical tensions, including export restrictions on advanced chips, further limit industry access.

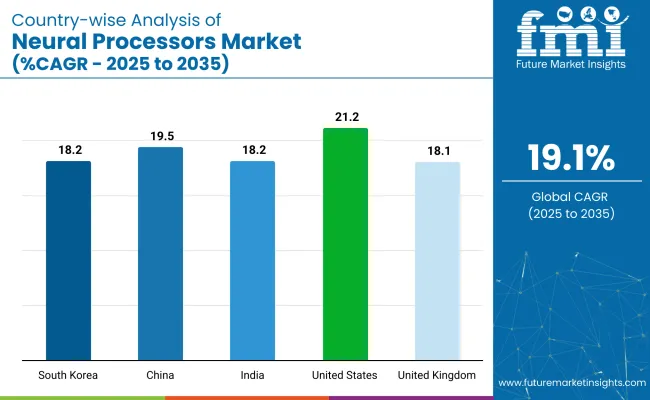

| Country | CAGR (2025-2035) |
|---|---|
| United States | 21.2% |
| China | 19.5% |
| India | 18.2% |
| South Korea | 18.2% |
| United Kingdom | 18.1% |

Industry demand is projected to rise at a 19.1% CAGR from 2025 to 2035. Of the five profiled countries (out of 40 covered), the United States leads at 21.2%, followed by China at 19.5%, India and South Korea at 18.2%, and the United Kingdom at 18.1%. Relative to the 19.1% global baseline, these rates translate to a growth premium of +11% for the United States, +2% for China, and -5% for India, South Korea, and the United Kingdom.

Divergence reflects local catalysts: strong investment in AI and machine learning technologies in the United States, rapid adoption of neural processors in China, and a growing focus on AI research and development in India, South Korea, and the UK.
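The growth premiums quoted above appear to be relative differences and not percentage-point gaps. Each one matches the country CAGR divided by the 19.1% global rate, minus one. Percentage-point gaps would instead give +2.1, +0.4, -0.9 and -1.0. The sketch below reproduces the published premiums under that interpretation. The `growth_premium` helper is hypothetical and does not come from the report.

```python
# Reproduce the country growth premiums as relative differences from the
# global baseline CAGR (interpretation inferred from the published figures).
baseline_cagr = 0.191

country_cagr = {
    "United States": 0.212,
    "China": 0.195,
    "India": 0.182,
    "South Korea": 0.182,
    "United Kingdom": 0.181,
}


def growth_premium(cagr: float, baseline: float) -> float:
    """Relative premium of a country CAGR over the baseline CAGR."""
    return cagr / baseline - 1


premiums = {c: growth_premium(r, baseline_cagr) for c, r in country_cagr.items()}

for country, premium in premiums.items():
    print(f"{country}: {premium:+.0%} vs. baseline")
```

Rounded to whole percentages, this yields +11%, +2%, -5%, -5% and -5%, which matches the figures in the text.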

The industry in the United States is set to record a CAGR of 21.2% through 2035. Expansion is being powered by national leadership in AI algorithm development, domestic semiconductor capacity, and robust demand from defense, healthcare, and automotive AI applications.

Firms such as NVIDIA, AMD, Intel, and Google are commercializing NPUs for large language models, edge inference, and cloud AI workloads. Federal support through the CHIPS Act and AI R&D grants is sustaining innovation at both fabless companies and integrated device manufacturers. Integration of NPUs into autonomous navigation, surgical robotics, and smart defense platforms reinforces the US lead in high-performance neural compute.

Sales of neural processors in the United Kingdom are projected to grow at a CAGR of 18.1% through 2035. Growth is anchored in chip-level AI innovation from startups and academic spinouts. Graphcore and Arm are advancing inference-first designs with high energy efficiency and dynamic workload adaptation.

The UK AI Strategy is channeling funding into compute hardware R&D, with Cambridge and Oxford leading processor architecture studies. NPUs are gaining adoption across NHS digital health pilots, financial algorithmic risk systems, and MOD AI readiness programs. The UK maintains a stronghold in Europe for custom AI silicon and low-latency edge chip deployment.

Demand for neural processors in China is estimated to grow at a CAGR of 19.5% through 2035. Domestic chipmakers are scaling AI accelerators for robotics, surveillance, and autonomous logistics under national tech self-reliance policies. Cambricon, Huawei, and Alibaba are releasing chipsets tailored for AI inference workloads integrated with 5G and cloud platforms.

Fabrication capacity is expanding under MIIT guidelines, while local startups are developing NPU IP optimized for language processing, smart city infrastructure, and real-time video analytics. China is positioned as a volume producer and strategic user of AI compute solutions.

The industry in India is projected to grow at a CAGR of 18.2% through 2035. Edge AI adoption across finance, mobility, and agriculture is spurring demand for lightweight neural chips. Indian IT firms and startups are collaborating with global silicon providers to localize low-power inference accelerators.

Government-backed semiconductor programs and the India AI Mission are prioritizing regional chip design and embedded AI training platforms. Use cases span from speech recognition in local languages to medical image analysis in rural health programs. With policy support and rising R&D depth, India is becoming a regional base for affordable, scalable NPU production.

Sales of neural processors in South Korea are forecasted to grow at a CAGR of 18.2% through 2035. Major electronics players are embedding AI accelerators into consumer, automotive, and wearable platforms. Samsung and SK hynix are developing NPU-integrated SoCs with compute-memory fusion, enhancing real-time inference in smartphones and XR devices.

Government R&D support and digital export targets are expanding AI chip deployment in gaming, surveillance, and EV ecosystems. South Korea’s role in producing CE-certified smart semiconductors is growing, with export volumes rising in Asia and the EU.
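The country CAGRs can also be expressed as ten-year growth multiples, which show how far each market would expand by 2035 relative to its own 2025 size. The report does not give 2025 country base values, so the sketch compares multiples only and not absolute dollar sizes.

```python
# Ten-year growth multiple implied by each CAGR: (1 + CAGR) ** years.
years = 10  # 2025 to 2035

cagrs = {
    "Global": 0.191,
    "United States": 0.212,
    "China": 0.195,
    "India": 0.182,
    "South Korea": 0.182,
    "United Kingdom": 0.181,
}

multiples = {name: (1 + rate) ** years for name, rate in cagrs.items()}

# Print from fastest- to slowest-growing market.
for name, multiple in sorted(multiples.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {multiple:.2f}x its 2025 size by 2035")
```

A gap of about three percentage points in annual growth compounds into a visibly wider spread over the decade. A 21.2% CAGR implies a market roughly 6.8 times its 2025 size, compared with roughly 5.3 times at 18.1%.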

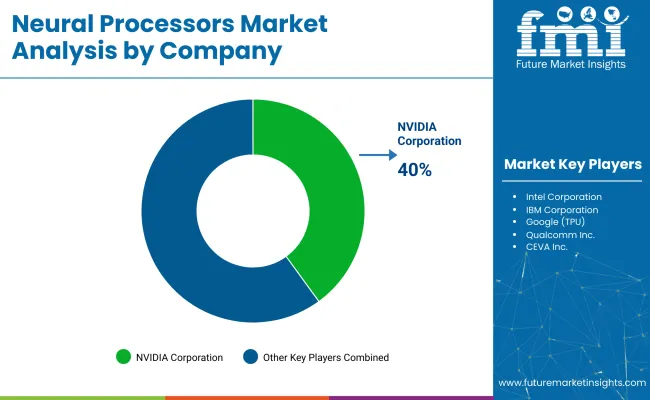

The global industry features a competitive landscape with dominant players, key players, and emerging players. Dominant players such as NVIDIA Corporation, Intel Corporation, and Google LLC lead the industry with extensive product portfolios, strong R&D capabilities, and robust distribution networks across AI, machine learning, and data center sectors.

Key players including IBM Corporation, Qualcomm Inc., and CEVA Inc. offer specialized solutions tailored to specific applications and regional industries. Emerging players, such as BrainChip Holdings Ltd., Graphcore Limited, and Teradeep Inc., focus on innovative technologies and cost-effective solutions, expanding their presence in the global industry.


| Report Attributes | Details |
|---|---|
| Industry Size (2025) | USD 176 million |
| Projected Industry Size (2035) | USD 1,010 million |
| CAGR (2025 to 2035) | 19.1% |
| Base Year for Estimation | 2024 |
| Historical Period | 2020 to 2024 |
| Projection Period | 2025 to 2035 |
| Quantitative Units | USD million for value, units for volume |
| Operation Outlook (Segment 1) | Training, Inference |
| Application Outlook (Segment 2) | Smartphones and Tablets, Autonomous Vehicles, Robotics and Drones, Healthcare and Medical Devices, Smart Home Devices and IoT, Cloud and Data Center AI, Industrial Automation, Others |
| Regions Covered | North America, Europe, Asia Pacific, Latin America, Middle East & Africa |
| Countries Covered | United States, Canada, Mexico, United Kingdom, Germany, France, China, Japan, India, South Korea, Australia, Brazil, Saudi Arabia, United Arab Emirates, South Africa |
| Key Players Influencing the Industry | Intel Corporation, IBM Corporation, Google (TPU), Qualcomm Inc., CEVA Inc., NVIDIA Corporation, Advanced Micro Devices (AMD), BrainChip Holdings Ltd., Graphcore Limited |
| Additional Attributes | Dollar sales by operation type and AI application; Surge in demand from autonomous systems and edge devices; Expansion of TPU and GPU chipsets for inference workloads; AI model complexity driving custom neural silicon; Regional AI semiconductor policy developments; Cloud AI chip deployment patterns and edge computing trends |

The industry is segmented by operation into training and inference.

Based on application, the industry is categorized into smartphones and tablets, autonomous vehicles, robotics and drones, healthcare and medical devices, smart home devices and IoT, cloud and data center AI, industrial automation, and others.

Geographically, the industry is analyzed across North America, Europe, Asia Pacific, Latin America, and the Middle East & Africa.

The industry size is projected to be USD 176 million in 2025 and USD 1,010 million by 2035.

The expected CAGR is 19.1% from 2025 to 2035.

NVIDIA Corporation is the leading company in the industry with a 40% industry share.

Inference operations are expected to dominate with a 67% industry share in 2025.

The United States is expected to grow the fastest with a projected CAGR of 21.2% from 2025 to 2035.

Our Research Products

The "Full Research Suite" delivers actionable market intel, deep dives on markets or technologies, so clients act faster, cut risk, and unlock growth.

The Leaderboard benchmarks and ranks top vendors, classifying them as Established Leaders, Leading Challengers, or Disruptors & Challengers.

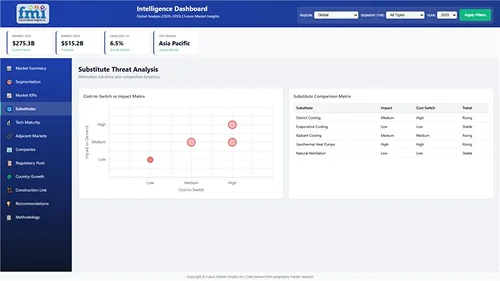

Locates where complements amplify value and substitutes erode it, forecasting net impact by horizon

We deliver granular, decision-grade intel: market sizing, 5-year forecasts, pricing, adoption, usage, revenue, and operational KPIs—plus competitor tracking, regulation, and value chains—across 60 countries broadly.

Spot the shifts before they hit your P&L. We track inflection points, adoption curves, pricing moves, and ecosystem plays to show where demand is heading, why it is changing, and what to do next across high-growth markets and disruptive tech

Real-time reads of user behavior. We track shifting priorities, perceptions of today’s and next-gen services, and provider experience, then pace how fast tech moves from trial to adoption, blending buyer, consumer, and channel inputs with social signals (#WhySwitch, #UX).

Partner with our analyst team to build a custom report designed around your business priorities. From analysing market trends to assessing competitors or crafting bespoke datasets, we tailor insights to your needs.

Supplier Intelligence

Discovery & Profiling

Capacity & Footprint

Performance & Risk

Compliance & Governance

Commercial Readiness

Who Supplies Whom

Scorecards & Shortlists

Playbooks & Docs

Category Intelligence

Definition & Scope

Demand & Use Cases

Cost Drivers

Market Structure

Supply Chain Map

Trade & Policy

Operating Norms

Deliverables

Buyer Intelligence

Account Basics

Spend & Scope

Procurement Model

Vendor Requirements

Terms & Policies

Entry Strategy

Pain Points & Triggers

Outputs

Pricing Analysis

Benchmarks

Trends

Should-Cost

Indexation

Landed Cost

Commercial Terms

Deliverables

Brand Analysis

Positioning & Value Prop

Share & Presence

Customer Evidence

Go-to-Market

Digital & Reputation

Compliance & Trust

KPIs & Gaps

Outputs

Full Research Suite comprises of:

Market outlook & trends analysis

Interviews & case studies

Strategic recommendations

Vendor profiles & capabilities analysis

5-year forecasts

8 regions and 60+ country-level data splits

Market segment data splits

12 months of continuous data updates

DELIVERED AS:

PDF EXCEL ONLINE

Neural Network Software Market

Digital Signal Processors Market

Commercial Food Processors Market

Western Blotting Processors Market Trends and Forecast 2025 to 2035

Telematics and Connectivity Processors Market

Thank you!

You will receive an email from our Business Development Manager. Please be sure to check your SPAM/JUNK folder too.

Chat With

MaRIA