Artificial‑intelligence tools have become the default answer to many business problems, from drafting emails to generating code. ChatGPT and its siblings now draft legal briefs, design websites and debug software. In parallel, a hardware arms race has erupted among Nvidia, AMD and Google to supply the graphics processing units (GPUs) and tensor processing units (TPUs) that power those models.

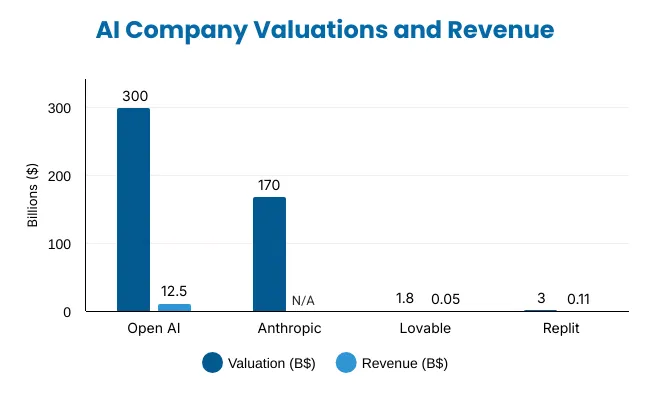

Venture capitalists are chasing astronomical valuations while enterprise buyers struggle to understand where the moat lies. Let’s examine how the leading players (OpenAI’s ChatGPT, Lovable.dev, Replit, Anthropic’s Claude and Nvidia) do and do not form an ecosystem.

First and foremost, the term “ecosystem” is often misused.

An ecosystem implies symbiotic relationships where organisms depend on one another for survival.

In the AI industry, the dependency is not only technical (models require GPUs and cloud infrastructure) but also economic. Large language model (LLM) providers depend on investors to fund training runs costing hundreds of millions of dollars and on regulators to permit large‑scale data collection. At the same time, lower‑tier startups often build on top of APIs offered by larger players, which exposes them to commoditization.

Modern generative AI models are extremely compute‑intensive. Unlike traditional software, whose performance gains have largely ridden Moore’s law, increasing a model’s capability by one order of magnitude often requires far more than a ten‑fold increase in floating‑point operations. The American Affairs Institute summarizes the cost curve as “roughly exponential with capability; few firms can afford frontier training.” This is not an academic curiosity: only a handful of companies have the capital, data centers and research talent necessary to train the largest models.

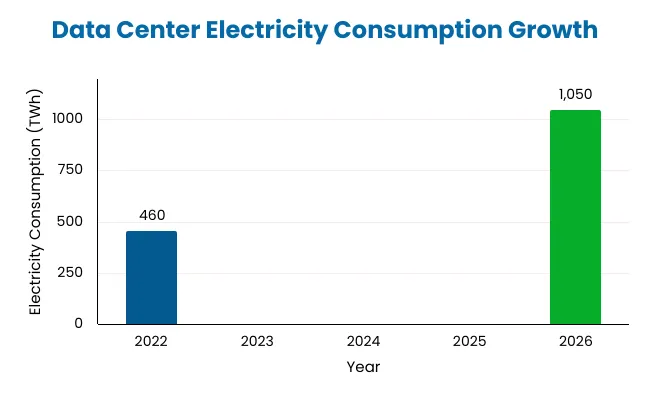

Training these systems consumes staggering amounts of energy. MIT researchers note that a generative AI training cluster can draw seven to eight times more electricity than a typical computing workload. Global data‑center electricity consumption reached 460 TWh in 2022 and could approach 1,050 TWh by 2026, roughly equivalent to the electricity consumption of Japan.
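The two data‑center figures above imply a steep compound annual growth rate. The short Python sketch below computes it, assuming smooth compound growth between 2022 and 2026; that is an illustrative simplification, since real demand will not follow a clean curve.

```python
def implied_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years` years."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


# Figures quoted above: 460 TWh in 2022 and a projected 1,050 TWh in 2026.
growth = implied_cagr(460.0, 1050.0, 2026 - 2022)
print(f"Implied growth: {growth:.1%} per year")
```

A rate of roughly 23 % a year more than doubles consumption over four years.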

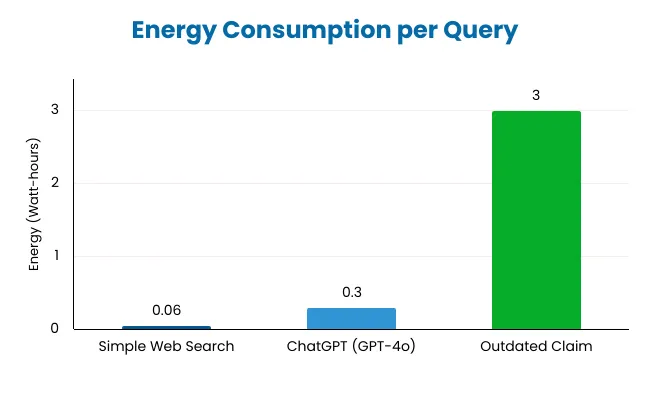

As models scale, individual inference requests also consume more energy. A widely circulated claim holds that each ChatGPT query uses about 3 watt‑hours. Researchers at Epoch AI argue this is an overestimate: a typical ChatGPT query consumes about 0.3 watt‑hours, roughly one‑tenth of the popular figure. Nonetheless, even 0.3 watt‑hours is about five times the energy of a conventional search‑engine query. The chart below compares these numbers and highlights the misconceptions around energy use.
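To see why the difference between 3 and 0.3 watt‑hours matters, the sketch below scales each per‑query figure to a daily total. The daily query volume is a hypothetical round number chosen for illustration, not a reported statistic, and the search‑query energy is simply derived from the “about five times” ratio in the text.

```python
WH_PER_MWH = 1_000_000  # 1 megawatt-hour = 1,000,000 watt-hours


def daily_energy_mwh(wh_per_query: float, queries_per_day: float) -> float:
    """Total daily energy in megawatt-hours for a given per-query energy cost."""
    return wh_per_query * queries_per_day / WH_PER_MWH


POPULAR_ESTIMATE_WH = 3.0          # widely circulated per-query claim
EPOCH_ESTIMATE_WH = 0.3            # Epoch AI's per-query estimate
SEARCH_WH = EPOCH_ESTIMATE_WH / 5  # implied by "about five times a search query"
QUERIES_PER_DAY = 1e9              # hypothetical daily volume, for illustration only

for label, wh in [
    ("popular claim", POPULAR_ESTIMATE_WH),
    ("Epoch AI", EPOCH_ESTIMATE_WH),
    ("search query", SEARCH_WH),
]:
    print(f"{label:>13}: {daily_energy_mwh(wh, QUERIES_PER_DAY):,.0f} MWh/day")
```

At this hypothetical volume, the tenfold gap between the two estimates is the difference between about 300 and 3,000 MWh per day.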

Energy use is not the only environmental issue. Cooling the chips often requires water; MIT reports that some data centers consume two liters of water for every kilowatt‑hour of electricity. Expanding AI models thus carries non‑trivial environmental and social costs, which receive far less attention than glossy product demos.
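Combining MIT’s water ratio with the 0.3 watt‑hour estimate gives a rough per‑query water footprint. This Python sketch assumes the 2 liters per kWh cooling ratio applies to every query, which may not hold for any particular facility since MIT reports it for some data centers; the daily volume is again a hypothetical round number.

```python
LITERS_PER_KWH = 2.0    # cooling-water ratio MIT reports for some data centers
WH_PER_QUERY = 0.3      # Epoch AI's per-query energy estimate
QUERIES_PER_DAY = 1e9   # hypothetical daily volume, for illustration only


def water_liters(energy_wh: float, liters_per_kwh: float = LITERS_PER_KWH) -> float:
    """Cooling water in liters implied by an energy draw given in watt-hours."""
    return energy_wh / 1000 * liters_per_kwh


per_query_ml = water_liters(WH_PER_QUERY) * 1000
daily_liters = water_liters(WH_PER_QUERY * QUERIES_PER_DAY)
print(f"Per query: {per_query_ml:.1f} mL")
print(f"Per day at the hypothetical volume: {daily_liters:,.0f} L")
```

A fraction of a milliliter per query looks negligible, but at scale it adds up to hundreds of thousands of liters a day.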

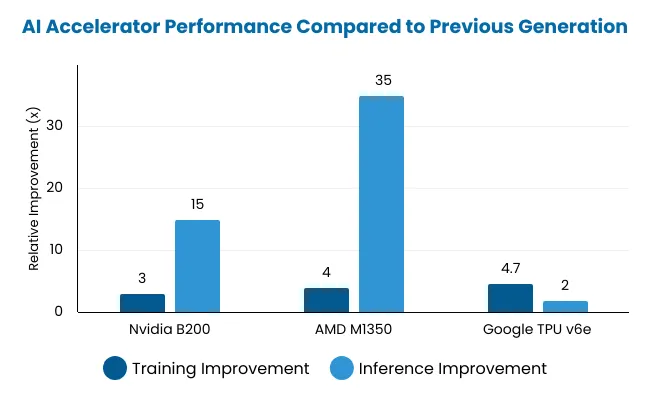

The compute requirement creates a moat that is both technical and economic. LLM providers need access to the latest hardware and are therefore beholden to chip manufacturers and cloud platforms. A TS2.Tech comparison of new accelerators shows the scale of the engineering leap, although each figure is measured against that vendor’s own previous generation, so the multiples are not directly comparable across vendors. Nvidia’s Blackwell B200 GPU delivers three times faster training and up to 15× faster inference than its predecessors. AMD’s MI350 offers four times higher AI compute and 35× faster inference than the prior generation. Google’s TPU v6e provides a 4.7× jump in peak compute and double the memory bandwidth of its predecessor.

Because these chips are expensive and in short supply, only well‑capitalized firms can secure enough of them to train frontier models. The result is a winner‑takes‑most dynamic. Companies that cannot afford to train their own models must build on top of APIs from those that can, making them vulnerable “wrappers” whose businesses could be replaced by native platform features.

Compute is thus the gravitational force around which this ecosystem orbits. Without GPUs and similar accelerators, popular AI tools including ChatGPT, Lovable, Replit and Claude could not exist. Nvidia’s dominance in AI hardware gives it pricing power and the ability to shape its partners’ roadmaps. Yet the relationship is not one‑sided: explosive demand for generative AI has massively boosted Nvidia’s revenue (its data‑center sales reached $26.3 billion in a single quarter) and funds further innovation.

Nvidia: the hardware backbone

The entire AI boom runs on a hardware foundation dominated by Nvidia. In March 2024 the company unveiled its Blackwell platform, featuring a two‑die (chiplet) design, 180 GB of HBM3e memory delivering 8 TB/s of bandwidth and support for low‑precision FP4/FP6 operations. Nvidia claims Blackwell can reduce inference cost and energy consumption by up to 25× compared with the previous generation. CEO Jensen Huang framed the product as “the engine that powers the next wave of AI industrial revolution,” noting that companies from cloud providers to chip designers plan to adopt Blackwell.

Financial results underline Nvidia’s importance. In the second quarter of fiscal 2025, the company reported $30 billion in revenue, up 122 % year‑over‑year, with $26.3 billion coming from the data‑center segment. Demand for the existing Hopper architecture remains strong, and anticipation for Blackwell has been described as “incredible”. Nvidia thus sits at the heart of the AI ecosystem: its chips, whether owned outright or rented through cloud providers, power OpenAI, Lovable, Replit and Anthropic; in return, these software platforms drive almost insatiable demand for Nvidia’s hardware.
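A quick calculation shows how heavily Nvidia’s results lean on data centers. The sketch uses only the reported quarterly figures; the 122 % growth rate applies to total revenue, so dividing by 2.22 recovers the approximate year‑earlier quarterly total.

```python
TOTAL_REVENUE_BN = 30.0   # fiscal 2025 Q2 revenue, USD billions
DATA_CENTER_BN = 26.3     # data-center segment, USD billions
YOY_GROWTH = 1.22         # total revenue up 122% year-over-year

data_center_share = DATA_CENTER_BN / TOTAL_REVENUE_BN
# Undo the growth: this year's revenue = last year's revenue * (1 + growth).
prior_year_revenue_bn = TOTAL_REVENUE_BN / (1 + YOY_GROWTH)

print(f"Data-center share of revenue: {data_center_share:.1%}")
print(f"Implied year-earlier quarterly revenue: ${prior_year_revenue_bn:.1f} billion")
```

Roughly 88 cents of every revenue dollar came from data centers, and quarterly revenue more than doubled from about $13.5 billion a year earlier.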

Valuations, revenue and the VC boom

The valuations and funding rounds of these companies highlight how capital markets view AI. The chart below compares company valuations and their annualized revenue. OpenAI leads with a $300 billion valuation and $12 to 13 billion in annualized (run‑rate) revenue as of August 1, 2025, while Anthropic’s valuation is rapidly approaching that territory despite limited disclosed revenue. Lovable’s revenue ($50 million) and Replit’s ($106 million) pale by comparison, yet both command unicorn valuations.
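Price‑to‑revenue multiples make the gap concrete. The text gives an exact valuation only for OpenAI, so this sketch uses the $1 billion unicorn threshold as a floor for Lovable and Replit; their actual multiples are at least as high as the figures computed here.

```python
def revenue_multiple(valuation_bn: float, revenue_bn: float) -> float:
    """Valuation divided by annual revenue (both in USD billions)."""
    return valuation_bn / revenue_bn


UNICORN_FLOOR_BN = 1.0  # a "unicorn" is a private company valued at $1 billion or more

openai_low = revenue_multiple(300.0, 13.0)   # higher revenue estimate -> lower multiple
openai_high = revenue_multiple(300.0, 12.0)
lovable_floor = revenue_multiple(UNICORN_FLOOR_BN, 0.050)  # $50 million revenue
replit_floor = revenue_multiple(UNICORN_FLOOR_BN, 0.106)   # $106 million revenue

print(f"OpenAI: {openai_low:.1f}x to {openai_high:.1f}x revenue")
print(f"Lovable: at least {lovable_floor:.1f}x revenue")
print(f"Replit: at least {replit_floor:.1f}x revenue")
```

Even at the unicorn floor, both startups are priced at multiples in the same range as OpenAI, despite revenue two orders of magnitude smaller.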

OpenAI’s ability to charge for premium subscriptions and enterprise products gives investors confidence that ChatGPT has a path to profitability. Anthropic’s skyrocketing valuation demonstrates the premium placed on teams that can build competitive LLMs. Lovable and Replit are valued not on current revenue but on the belief that democratized coding will unlock vast markets.

VC interest reflects a broader financial narrative. Technology investors are searching for the next platform shift and view generative AI as comparable to the birth of the internet. However, the capital intensity of the sector means that only companies backed by deep pockets can train large models. For startups, raising money is both necessary and risky: valuations can collapse if technical progress stalls or regulatory headwinds emerge.

Wall Street’s view

Public‑market investors have so far had little direct exposure to pure‑play generative AI because most of these companies remain private. Nvidia is the exception. Its share price has multiplied as investors bet on sustained demand for AI GPUs, and it has become one of the world’s most valuable firms. Analysts liken Nvidia’s dominance to Intel’s in the PC era. Yet this concentration also exposes the ecosystem to a single supplier: any manufacturing issue or export restriction could cascade through all downstream players.

Wall Street is more cautious about software providers. The fear is that many of today’s AI applications are little more than “wrappers” around language‑model APIs. If the base models become commoditized, the value of these wrappers could plummet. Private investors often justify high valuations by pointing to network effects and brand, but public markets may demand evidence of durable competitive advantage.

What is possible today

The capabilities of generative AI are already remarkable. Models like GPT‑4o and Claude 3.5 can draft essays, write code, answer questions and process images (and, in GPT‑4o’s case, audio). OpenAI research suggests that 80 % of the U.S. workforce could see at least 10 % of their tasks affected by language models, and 19 % could see at least half of their tasks affected. In coding, Replit reports that its AI agent can autonomously build full applications, fix bugs and deploy to the web. Lovable claims that non‑programmers can describe an app and have the platform create a production‑grade version in minutes. Claude’s long context window and Artifacts feature allow teams to collaborate on complex documents within Claude.

In hardware, new architectures drastically reduce inference costs and could help bring AI on‑device. Nvidia’s Blackwell supports low‑precision FP4 operations, while AMD’s MI350 and Google’s TPU v6e offer similar leaps. Because lower precision cuts the memory and energy needed per operation, tasks previously reserved for cloud servers may increasingly run on smaller edge devices, creating new business models and privacy benefits.
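A back‑of‑the‑envelope calculation shows why lower precision matters for smaller devices: weight memory scales linearly with bits per parameter. The sketch below uses a hypothetical 70‑billion‑parameter model and counts weights only, ignoring activations, the key‑value cache and the per‑block scaling factors that practical FP4 formats carry.

```python
BITS_PER_PARAM = {"FP16": 16, "FP8": 8, "FP4": 4}


def weight_memory_gb(num_params: float, bits: int) -> float:
    """Memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits / 8 / 1e9


PARAMS = 70e9  # hypothetical 70-billion-parameter model

for fmt, bits in BITS_PER_PARAM.items():
    print(f"{fmt}: {weight_memory_gb(PARAMS, bits):.0f} GB of weights")
```

Each halving of precision halves the footprint, so weights that need 140 GB at FP16 fit in about 35 GB at FP4, before the overheads noted above.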

Limits and unsolved challenges

Despite the hype, the current ecosystem has significant limitations. Energy and water consumption raise environmental concerns. The scaling laws that enable bigger models come with diminishing returns; training costs rise exponentially, but improvements in accuracy or reasoning do not always keep pace. There is also a lingering reliability problem: language models hallucinate, misattribute facts and sometimes produce unsafe outputs. Anthropic emphasises safety and alignment, yet the field lacks consensus on best practices.
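Diminishing returns are easiest to see with a power law, the functional form commonly used to describe empirical scaling laws. This sketch assumes loss = a · C^(-α) with a made‑up exponent α = 0.05 and arbitrary compute budgets C; real exponents depend on the model, data and evaluation, so the numbers illustrate the shape of the curve, not any actual system.

```python
ALPHA = 0.05  # hypothetical exponent, for illustration only


def loss(compute_flops: float, a: float = 1.0, alpha: float = ALPHA) -> float:
    """Hypothetical power-law scaling curve: loss = a * C**(-alpha)."""
    return a * compute_flops ** (-alpha)


budgets = [10.0 ** e for e in range(20, 25)]  # illustrative budgets: 1e20 to 1e24 FLOPs
losses = [loss(c) for c in budgets]
# Absolute improvement bought by each successive tenfold increase in compute.
improvements = [prev - cur for prev, cur in zip(losses, losses[1:])]

for c, value in zip(budgets, losses):
    print(f"C = {c:.0e} FLOPs -> loss {value:.4f}")
for step, gain in enumerate(improvements, start=1):
    print(f"step {step}: 10x more compute, loss improves by {gain:.4f}")
```

Each tenfold increase in compute multiplies the loss by the same factor (10^(-0.05), about 0.89), so every successive order of magnitude of spending buys a smaller absolute improvement than the one before.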

Regulatory risk is growing. Governments are proposing rules around data privacy, AI safety and access to compute. If regulators restrict access to training data or GPUs, the ecosystem could fragment. Moreover, the concentration of compute within a few tech giants risks reinforcing existing monopolies and excluding smaller players. Critics argue that the AI boom may simply entrench incumbents rather than democratize technology.

Another challenge is platform dependency. Lovable and Replit rely on API access to models they do not control. If OpenAI or Anthropic change terms or raise prices, these startups could see margins eroded. VC hype may obscure this vulnerability, but long‑term sustainability demands either owning the model or building defensible features beyond simple chat interfaces.
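The margin squeeze can be illustrated with simple unit economics. All numbers in this sketch are hypothetical (a $20 monthly subscription, $8 of model‑API spend and $2 of other direct costs per user) and are not drawn from Lovable’s or Replit’s financials.

```python
def gross_margin(price: float, model_cost: float, other_cost: float) -> float:
    """Share of revenue left after paying for model inference and other direct costs."""
    return (price - model_cost - other_cost) / price


PRICE = 20.0       # hypothetical monthly subscription per user (USD)
MODEL_COST = 8.0   # hypothetical model-API spend per user per month (USD)
OTHER_COST = 2.0   # hypothetical hosting and support cost per user (USD)

for increase in (0.0, 0.25, 0.5):
    margin = gross_margin(PRICE, MODEL_COST * (1 + increase), OTHER_COST)
    print(f"API price +{increase:.0%}: gross margin {margin:.0%}")
```

Under these assumptions a 50 % rise in API prices cuts gross margin from 50 % to 30 % without the startup changing anything, which is the dependency the paragraph describes.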

The answer is nuanced

From a technical standpoint, the companies are deeply intertwined: ChatGPT, Lovable and Replit all depend on massive compute clusters typically powered by Nvidia hardware; Lovable and Replit often wrap the models produced by OpenAI or Anthropic; Claude offers API endpoints that third parties use to build new applications. This interdependence justifies the term ecosystem. Additionally, investors and media treat them collectively as representatives of the generative AI boom, amplifying the sense of shared destiny.

However, from a strategic and economic perspective, their fates diverge. OpenAI and Anthropic have direct access to training data, research talent and compute budgets that are out of reach for startups. Their moats are based on scale and brand. Lovable and Replit operate one layer higher, providing user‑friendly interfaces for building software but relying on third‑party models. They risk commoditization and must differentiate through workflow integration, design and community. Nvidia sits apart as the picks‑and‑shovels supplier; it profits regardless of which model wins. The dynamic resembles the gold rush: miners may go bust, but the sellers of shovels (in this case, GPUs) collect steady revenue.

Finally, the ecosystem analogy masks competitive tensions. OpenAI and Anthropic may collaborate with startups but ultimately compete for the same enterprise customers. Nvidia sells to all, but with limited supply, it must allocate GPUs strategically. As more entrants like Google, Meta and Amazon train their own models, the market could fragment further. In other words, there is no guarantee that one cohesive AI ecosystem will persist. Instead, expect multiple overlapping ecosystems defined by hardware supply chains, regulatory regimes and language‑model lineages.

The relationship between ChatGPT, Lovable, Replit, Claude and Nvidia is both synergistic and contested. They are connected by compute: without hardware advances, there would be no large language models; without models, there would be no demand for specialized chips.

Venture capital inflates valuations to nose‑bleed levels, betting that these products will transform labour markets and unlock trillions in productivity. Yet the realities of energy consumption, the scarcity of GPUs, the risk of commoditization and the uncertain regulatory environment caution against simplistic narratives. In the coming years, the winners will be those who either control the base models and data or provide indispensable infrastructure, rather than those who merely wrap existing APIs. As the ecosystem evolves, critical analysis (grounded in first principles, aware of physical limits and skeptical of hype) is essential. Only then can businesses and investors navigate the AI revolution with clear eyes.
